When textless natural language processing (NLP) first emerged, the core idea was to train a language model on sequences of learnable, discrete units rather than on transcribed text, so that NLP tasks could be applied directly to spoken utterances. Speech editing poses a related challenge: a model must modify individual words or phrases to match a target transcript while leaving the rest of the original speech unaltered. Researchers are now exploring whether a single unified model can handle both zero-shot text-to-speech (TTS) and speech editing.

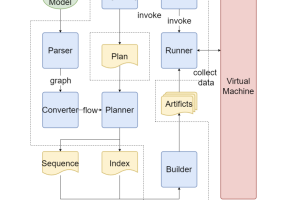

Recent research from the University of Texas at Austin and Rembrand presents VOICECRAFT, a Transformer-based neural codec language model (NCLM) that generates neural speech codec tokens for infilling, using autoregressive conditioning on bidirectional context. VOICECRAFT achieves state-of-the-art (SotA) results in both zero-shot TTS and speech editing. The approach rests on a two-stage token rearrangement procedure consisting of a causal masking step and a delayed stacking step. The causal masking step, inspired by the success of causal masked multimodal models in joint text-image modeling, is what allows autoregressive generation with bidirectional context on speech codec sequences.
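To make the causal masking idea more concrete, here is a minimal sketch on a flat list of tokens. It assumes the rearrangement works the way it does in causal masked language models generally: each span to be infilled is moved to the end of the sequence and a mask token is left in its place, so that a left-to-right model sees context from both sides before generating the span. The function name `causal_mask_rearrange` and the `<M1>`-style mask strings are illustrative choices, not names from the VOICECRAFT code.

```python
from typing import List, Sequence, Tuple


def causal_mask_rearrange(
    tokens: Sequence[str], spans: List[Tuple[int, int]]
) -> List[str]:
    """Move each [start, end) span to the end, leaving a mask token behind.

    Hypothetical helper for illustration; mask naming is an assumption.
    """
    context: List[str] = []  # unmasked tokens interleaved with mask tokens
    tail: List[str] = []     # masked spans, each introduced by its mask token
    cursor = 0
    for i, (start, end) in enumerate(sorted(spans), start=1):
        if start < cursor or end <= start or end > len(tokens):
            raise ValueError("spans must be non-empty, in range, non-overlapping")
        mask = f"<M{i}>"
        context.extend(tokens[cursor:start])  # context before the span
        context.append(mask)                  # placeholder where the span was
        tail.append(mask)                     # same token announces the span
        tail.extend(tokens[start:end])
        cursor = end
    context.extend(tokens[cursor:])           # context after the last span
    return context + tail


if __name__ == "__main__":
    seq = ["y1", "y2", "y3", "y4", "y5", "y6"]
    print(causal_mask_rearrange(seq, [(1, 3), (4, 5)]))
```

Because the masked spans sit at the end, an ordinary autoregressive model can condition on tokens that originally came both before and after each span.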

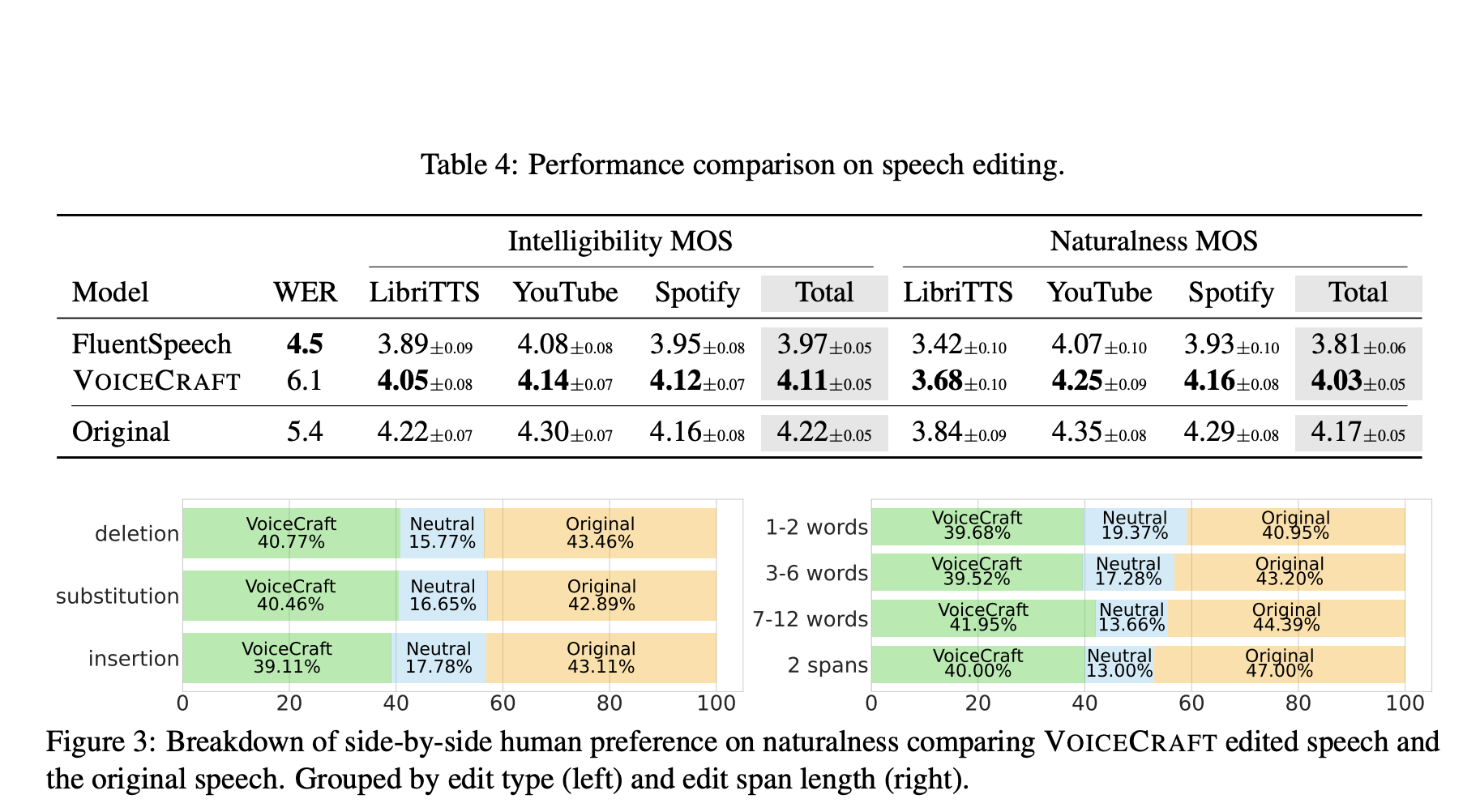

To further ensure effective multi-codebook modeling, the team combines causal masking with delayed stacking in the proposed token rearrangement procedure. To evaluate speech editing, the team built REALEDIT, a realistic and challenging dataset. It contains 310 real-world speech editing examples, with waveforms ranging from 5 to 12 seconds, collected from audiobooks, YouTube videos, and Spotify podcasts. The target transcripts are produced by editing the source speech transcripts so that they remain grammatically correct and semantically coherent.
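Delayed stacking can be illustrated with a small sketch. It assumes the common delay pattern for multi-codebook codec tokens: codebook k is shifted right by k time steps and the gaps are filled with a placeholder, so that at each step the model predicts one code per codebook while the finer codebooks lag behind the coarser ones. The names `delay_stack`, `undo_delay_stack`, and the `EMPTY` id are hypothetical, and the real model's span handling may differ from this simplified version.

```python
from typing import List

EMPTY = -1  # hypothetical placeholder id for positions with no code


def delay_stack(frames: List[List[int]], empty: int = EMPTY) -> List[List[int]]:
    """Shift codebook k right by k steps; input is T frames of K codes each."""
    if not frames:
        return []
    num_steps, num_codebooks = len(frames), len(frames[0])
    delayed = []
    for t in range(num_steps + num_codebooks - 1):
        column = []
        for k in range(num_codebooks):
            src = t - k  # original time step that lands here for codebook k
            column.append(frames[src][k] if 0 <= src < num_steps else empty)
        delayed.append(column)
    return delayed


def undo_delay_stack(delayed: List[List[int]], num_codebooks: int) -> List[List[int]]:
    """Invert delay_stack, recovering the original T x K frames."""
    num_steps = len(delayed) - num_codebooks + 1
    return [
        [delayed[t + k][k] for k in range(num_codebooks)]
        for t in range(num_steps)
    ]


if __name__ == "__main__":
    frames = [[11, 12, 13], [21, 22, 23]]  # 2 time steps, 3 codebooks
    for column in delay_stack(frames):
        print(column)
```

A sequence of T frames with K codebooks becomes T + K - 1 columns, and the shift is exactly invertible once generation is finished.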

The dataset covers a range of editing scenarios, including insertion, deletion, substitution, and editing multiple spans at once, with the edited text ranging from one to sixteen words in length. Because the recordings vary in subject matter, accent, speaking style, recording environment, and background noise, REALEDIT is more challenging than popular speech synthesis evaluation datasets such as VCTK, LJSpeech, and LibriTTS, which consist of read speech such as audiobooks. This diversity and realism make REALEDIT a good barometer for the real-world applicability of speech editing models.

Compared with the previous SotA speech editing model on REALEDIT, VOICECRAFT performs far better in subjective human listening tests. Most importantly, VOICECRAFT’s edited speech sounds almost identical to the original, unaltered audio. In zero-shot TTS, VOICECRAFT outperforms strong baselines, including a reproduced VALL-E and the well-known commercial model XTTS v2, without requiring any fine-tuning. The team’s evaluation data included audiobooks and YouTube videos.

Despite VOICECRAFT’s progress, the team highlights some limitations, such as:

- The most notable issue is that generation occasionally produces long silences followed by scratching sounds. In this study the team worked around the problem by sampling multiple utterances and selecting the shorter ones, but more elegant and efficient methods are needed.

- Another critical issue for AI safety is how to watermark and detect synthetic speech. Watermarking and deepfake detection have received a lot of attention recently, and there has been significant progress.

However, with the advent of more sophisticated models like VOICECRAFT, the team believes that safety researchers face new opportunities and hurdles. They have made all of their code and model weights publicly available to help with research into AI safety and speech synthesis.
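The workaround for long silences mentioned in the first limitation, sampling several utterances and keeping a shorter one, can be sketched as follows. This is a minimal illustration under stated assumptions: `generate` stands in for any stochastic sampling call that returns a token sequence, `pick_shortest` and `toy_generate` are hypothetical names, and keeping only the single shortest candidate is a simplification of "selecting the shorter ones".

```python
import random
from typing import Callable, List


def pick_shortest(
    generate: Callable[[random.Random], List[int]],
    num_samples: int = 4,
    seed: int = 0,
) -> List[int]:
    """Draw num_samples candidates and return the shortest one.

    Assumption: an unusually long output is more likely to contain a long
    silence, so shorter candidates are preferred.
    """
    if num_samples < 1:
        raise ValueError("num_samples must be at least 1")
    rng = random.Random(seed)
    candidates = [generate(rng) for _ in range(num_samples)]
    return min(candidates, key=len)


def toy_generate(rng: random.Random) -> List[int]:
    """Stand-in for a real model: returns a random-length token list."""
    return [rng.randrange(1024) for _ in range(rng.randint(50, 200))]


if __name__ == "__main__":
    best = pick_shortest(toy_generate, num_samples=4)
    print(len(best))
```

The cost grows linearly with the number of samples, which is one reason a more efficient remedy would be preferable.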

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Dhanshree Shenwai is a Computer Science Engineer with experience in FinTech companies across the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone’s life easier in today’s evolving world.