Computer architecture research has a long history of producing simulators and tools for evaluating and shaping computer system design. In the late 1990s, for instance, the SimpleScalar simulator was developed to let researchers test new microarchitecture ideas. Since then, simulators and tools such as gem5, DRAMSys, and many others have driven significant advances in the field, helped by the widespread availability of shared resources and infrastructure across academia and industry.

Industry and academia are increasingly turning to machine learning (ML) optimization in computer architecture research to meet stringent domain-specific requirements. Examples include ML for computer architecture, ML for TinyML acceleration, DNN accelerator datapath optimization, memory controllers, power consumption, security, and privacy. Although prior work has shown the benefits of ML in design optimization, obstacles to its adoption remain, such as the lack of strong, reproducible baselines, which prevents fair and objective comparison across methodologies. Steady progress requires understanding these obstacles and tackling them collectively.

ML is now widely used to simplify design space exploration for domain-specific architectures. Tempting as this is, it comes with several difficulties:

- Finding the best algorithm in a growing library of ML techniques is difficult.

- There is no clear way to evaluate the approaches’ relative performance and sample efficiency.

- The adoption of ML-aided architecture design space exploration and the production of repeatable artifacts are hampered by the absence of a unified framework for fair, reproducible, and objective comparison across various methodologies.

To address these issues, Google researchers present ArchGym, a flexible, open-source gym that integrates a range of search algorithms with architecture simulators.

Researching architecture with machine learning: Major challenges

There are many obstacles in the way of studying architecture with the help of machine learning.

No method exists to systematically determine the best ML algorithm or hyperparameters (e.g., learning rate, warm-up steps) for a given problem in computer architecture (e.g., identifying the best solution for a DRAM controller). Design space exploration (DSE) can now draw on a growing variety of ML and heuristic methods, from random walks to reinforcement learning (RL). While these techniques improve noticeably on their chosen baselines, it is unclear whether the gains come from the optimization algorithm itself or from the chosen hyperparameters.

Computer architecture simulators have been essential to architectural progress, but there is a pressing concern about balancing accuracy, speed, and cost during the exploration phase. Depending on the underlying model (e.g., cycle-accurate vs. ML-based proxy models), simulators can provide vastly different performance estimates. Analytical or ML-based proxy models are fast because they can ignore low-level details, yet they typically have a high prediction error. In addition, commercial licensing can limit how often a simulator can be run to collect data. Taken together, these constraints create performance vs. sample-efficiency trade-offs that affect which optimization algorithm is chosen for design exploration.
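As a rough illustration of the trade-off described above, the sketch below screens every candidate with a cheap but imprecise proxy and spends a small, fixed budget of accurate simulator calls on the shortlist only. This is one common way of coping with limited simulator access, not something the article attributes to ArchGym. Every name here (`BudgetedSimulator`, `slow_accurate`, `fast_proxy`, `screen_then_confirm`) is hypothetical, and both cost functions are toy stand-ins rather than real simulators.

```python
class BudgetedSimulator:
    """Wraps a cost function and refuses to run once the call budget is spent."""

    def __init__(self, cost_fn, max_calls):
        self.cost_fn = cost_fn
        self.max_calls = max_calls
        self.calls = 0

    def __call__(self, x):
        if self.calls >= self.max_calls:
            raise RuntimeError("simulation budget exhausted")
        self.calls += 1
        return self.cost_fn(x)


def slow_accurate(x):
    # Toy stand-in for an expensive, accurate simulator (lower cost is better).
    return (x - 7) ** 2


def fast_proxy(x):
    # Toy stand-in for a cheap proxy: same trend, plus a systematic error.
    return (x - 7) ** 2 + 3 * (x % 2)


def screen_then_confirm(candidates, proxy, simulator, k):
    # Rank all candidates with the proxy, then re-evaluate only the top k
    # with the budgeted simulator and keep the best of those.
    shortlist = sorted(candidates, key=proxy)[:k]
    return min(shortlist, key=simulator)


sim = BudgetedSimulator(slow_accurate, max_calls=3)
best = screen_then_confirm(range(16), fast_proxy, sim, k=3)
print(best, sim.calls)
```

In this toy setup the proxy alone would rank a neighbouring point ahead of the true optimum, while the three accurate calls on the shortlist recover it. How large the shortlist needs to be depends on how wrong the proxy can be, which is exactly the tension the paragraph above describes.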

Finally, the landscape of ML algorithms is changing quickly, and some ML algorithms need data to be useful. In addition, turning the output of DSE into meaningful artifacts, such as datasets, is essential for gaining insight into the design space.

ArchGym design

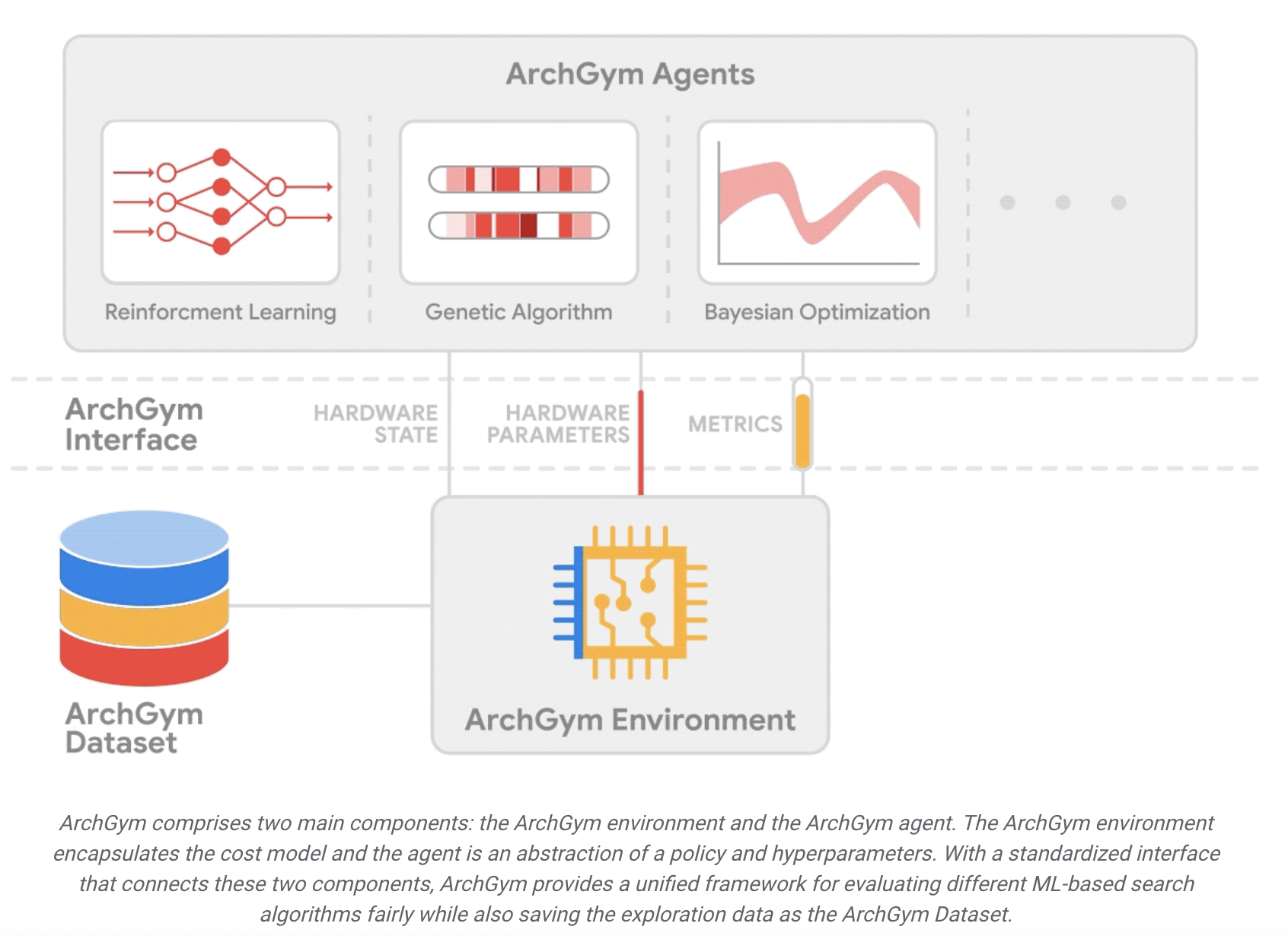

ArchGym addresses these problems by providing a uniform way to compare different ML-based search algorithms fairly. It has two primary parts:

1) The ArchGym environment

2) The ArchGym agent

The environment encapsulates the architecture cost model and the target workload(s), and computes the cost of running the workload for a given set of architectural parameters. The agent encapsulates the ML algorithm used for the search, which consists of hyperparameters and a policy. The hyperparameters are intrinsic to the algorithm and can significantly affect the results. The policy, in contrast, determines how the agent iteratively selects parameters to optimize the target objective.

ArchGym’s standardized interface connects these two parts, and all exploration data is stored in the ArchGym Dataset. The interface consists of three primary signals: hardware state, hardware parameters, and metrics. These signals are the minimum needed to establish a meaningful communication channel between the environment and the agent. Using them, the agent observes the state of the hardware and suggests a set of hardware parameters to iteratively optimize a (user-defined) reward. The reward is a function of hardware performance metrics.
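The environment, agent, and interface described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not ArchGym's actual API: the class names, the `step`/`act` methods, the parameter names (`queue_depth`, `page_policy`), and the toy cost model are all hypothetical, and the observed state is simply taken to be the latest metrics.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ToyDramEnv:
    """Hypothetical environment: a toy cost model plus a user-defined reward."""
    target_latency: float = 10.0
    dataset: list = field(default_factory=list)  # log of every exploration step

    def cost_model(self, params):
        # Toy analytical stand-in for a simulator, not a real DRAM model.
        latency = 40.0 / params["queue_depth"] + 0.5 * params["page_policy"]
        power = 1.0 + 0.2 * params["queue_depth"]
        return {"latency": latency, "power": power}

    def reward(self, metrics):
        # User-defined reward expressed as a function of the hardware metrics.
        return -abs(metrics["latency"] - self.target_latency) - 0.1 * metrics["power"]

    def step(self, params):
        # Parameters go in; the observed state (here, the metrics) and reward come out.
        metrics = self.cost_model(params)
        r = self.reward(metrics)
        self.dataset.append({"params": dict(params), "metrics": metrics, "reward": r})
        return metrics, r


class RandomSearchAgent:
    """Hypothetical agent: the seed is its hyperparameter, `act` is its policy."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def act(self, state):
        # This simple policy ignores the observed state and samples uniformly.
        return {"queue_depth": self.rng.randint(1, 16),
                "page_policy": self.rng.choice([0, 1])}


def explore(env, agent, steps):
    state, best = None, None
    for _ in range(steps):
        params = agent.act(state)
        state, reward = env.step(params)
        if best is None or reward > best["reward"]:
            best = env.dataset[-1]
    return best


env = ToyDramEnv()
best = explore(env, RandomSearchAgent(seed=0), steps=50)
print(best)
```

Because the agent only sees state and reward and the environment only sees parameters, either side can be swapped out (a different search algorithm, a different cost model) without touching the other, and the logged `dataset` plays the role of the stored exploration data.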

Using ArchGym, the researchers show empirically that, across a wide range of optimization objectives and DSE problems, at least one set of hyperparameters exists that yields the same hardware performance as other ML algorithms. Choosing the hyperparameters of an ML algorithm or of its baseline arbitrarily can therefore lead to a wrong conclusion about which family of ML algorithms is superior. They demonstrate that, with suitable hyperparameter tuning, various search algorithms, including random walk (RW), can find the best reward. Keep in mind, however, that identifying the right combination of hyperparameters may take considerable effort or luck.
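To make the hyperparameter sensitivity concrete, here is a small sketch of a sweep over a single hyperparameter (the step size) of a random walk on a toy one-dimensional design space. It assumes a hypothetical reward function with its optimum at `x = 73` and a fixed budget of 20 evaluations per run; none of this reproduces the paper's experiments or ArchGym's code.

```python
import random


def toy_reward(x):
    # Hypothetical single-parameter design space with its optimum at x = 73.
    return -(x - 73) ** 2


def random_walk(step_size, budget, seed, lo=0, hi=100):
    """Random walk from `lo` that remembers the best reward seen so far."""
    rng = random.Random(seed)
    x = lo
    best = toy_reward(x)
    for _ in range(budget):
        # Move one step left or right, clamped to the design space bounds.
        x = min(hi, max(lo, x + rng.choice([-step_size, step_size])))
        best = max(best, toy_reward(x))
    return best


def sweep(step_sizes, budget=20, seeds=range(5)):
    # For each hyperparameter value, keep the best reward across several seeds.
    return {s: max(random_walk(s, budget, seed) for seed in seeds)
            for s in step_sizes}


results = sweep([1, 4, 16])
for step_size, reward in results.items():
    print(f"step_size={step_size:>2}  best reward={reward}")
```

With a step size of 1 and only 20 evaluations, the walk can never move more than 20 units from its starting point, so it cannot get near the optimum whatever the seed. The same algorithm with a larger step size at least has the chance to, which is why comparing algorithms at arbitrarily chosen hyperparameters can be misleading.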

ArchGym provides a common, extensible interface for ML-aided architecture DSE and is available as open-source software. It also enables stronger baselines for computer architecture research problems and fair, reproducible evaluation of different ML techniques. The researchers believe it would be a major step forward for the field if computer architecture researchers had a shared place to apply machine learning, speed up their work, and inspire new and creative design ideas.

Check out the Google Blog, Paper, and GitHub link.

Dhanshree Shenwai is a Computer Science Engineer with experience at FinTech companies in the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone’s life easier in today’s evolving world.