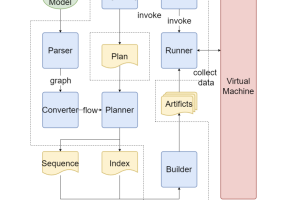

In this paper, we propose an algorithm to optimize a byte-level representation for end-to-end (E2E) automatic speech recognition (ASR). Byte-level representations are often used by large-scale multilingual ASR systems when the combined character set of the supported languages is large. The compactness and universality of a byte-level representation allow ASR models to use a smaller output vocabulary and therefore provide more flexibility. UTF-8 is the most commonly used byte-level representation and has been successfully applied to ASR. However, it was not designed for ASR or for any other machine learning task. By using an auto-encoder and vector quantization, we show that we can optimize a byte-level representation for ASR and achieve better accuracy. Our proposed framework can incorporate information from different modalities and provides an error correction mechanism. In an English/Mandarin dictation task, we show that a bilingual ASR model built with this approach can outperform the UTF-8 representation by 5% relative in error rate.
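The UTF-8 baseline that the abstract compares against can be illustrated with a few lines of Python. This is only a sketch of the baseline byte-level labeling, not the optimized auto-encoder and vector quantization representation proposed here, and the function names are hypothetical. It shows the two properties the abstract relies on: every label comes from a fixed 256-symbol vocabulary whether the text is English or Mandarin, and because characters take a variable number of bytes (1 for ASCII, 3 for common CJK characters), an incomplete or corrupted byte sequence may not decode to valid text.

```python
# Sketch of the UTF-8 byte-level labeling used as a baseline.
# Function names are hypothetical and chosen for illustration only.


def utf8_byte_labels(text: str) -> list[int]:
    """Map a transcript to a sequence of byte labels, each in [0, 255]."""
    return list(text.encode("utf-8"))


def labels_to_text(labels: list[int]) -> str:
    """Invert the mapping; raises UnicodeDecodeError on invalid sequences."""
    return bytes(labels).decode("utf-8")


def bytes_per_char(text: str) -> dict[str, int]:
    """Number of UTF-8 bytes used by each distinct character in the text."""
    return {ch: len(ch.encode("utf-8")) for ch in text}


def is_valid_byte_sequence(labels: list[int]) -> bool:
    """True if a predicted byte sequence decodes to valid UTF-8 text."""
    try:
        labels_to_text(labels)
        return True
    except UnicodeDecodeError:
        return False


if __name__ == "__main__":
    print(utf8_byte_labels("hello"))  # ASCII: one byte per character
    print(utf8_byte_labels("你好"))    # Mandarin: three bytes per character
    print(bytes_per_char("a你"))
    # A sequence cut off mid-character is not valid UTF-8.
    print(is_valid_byte_sequence([228, 189]))
```

Running it prints `[104, 101, 108, 108, 111]`, `[228, 189, 160, 229, 165, 189]`, `{'a': 1, '你': 3}` and `False`. The last line hints at why a representation designed for ASR, with an explicit error correction mechanism, can be attractive: with plain UTF-8, a single missing or wrong byte can leave the decoder with no valid character at all.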