Introduction

The field of Artificial Intelligence (AI) is full of specialized terms, many of which seem complex at first glance. Understanding the key terms is essential not only for professionals but also for enthusiasts who want to keep up with advances in technology. This article gives a clear and concise introduction to some of the most important terms you will encounter in AI, and serves as an approachable resource for understanding the concepts driving AI’s expansion.

What Is AI Terminology?

AI terminology refers to the specialized language used to describe various concepts, techniques, and processes within the realm of Artificial Intelligence. Words like “algorithm” or “neural network” are common in AI-related discussions, but they carry distinct meanings in this particular context. These terminologies are essential for defining the methodologies employed by researchers, developers, and engineers to implement AI.

Familiarizing oneself with AI jargon allows for a deeper understanding of the technology. Whether the goal is to understand how an algorithm works or why neural networks matter in attempts to mimic human intelligence, AI terminology is the framework through which broader ideas are communicated. Because AI is applied in fields as diverse as healthcare, robotics, and entertainment, understanding these terms matters across many disciplines.

AI language encompasses a wide range of areas such as Natural Language Processing (NLP), Machine Learning (ML), artificial neural networks, and more. Although these terms are sometimes used loosely or even interchangeably, each has a specific meaning that defines its role within the broader AI ecosystem. For someone diving into the world of AI, grasping these distinctions is the first step in navigating a complex yet exciting terrain.

The Importance of Understanding AI Language

Learning and understanding AI terminologies is not just an academic necessity; it is critical for grasping the underlying technology that is reshaping the future. For developers, entrepreneurs, and anyone involved in tech, the ability to communicate ever-evolving ideas becomes easier when you’re fluent in AI jargon.

AI language is often perceived as confusing and overly technical, but possessing a strong understanding sheds light on how advancements like smart assistants or autonomous vehicles work. Not understanding these terms can limit one’s ability to contribute effectively in discussions or make informed decisions.

Mastering AI-specific terminology helps individuals stay relevant in their industries. Many corporations are adopting AI-driven solutions, so professionals need to understand the basic terms to contribute to brainstorming sessions and decision-making processes. Moreover, the terminology provides a framework for comparing different AI tools and for forming a more informed opinion about how these technologies may evolve.

Key Terms: Algorithms, Models, and Neural Networks

At the heart of Artificial Intelligence are three pivotal concepts: algorithms, models, and neural networks. Each of these plays an instrumental role in AI’s functionality and its promise to revolutionize industries.

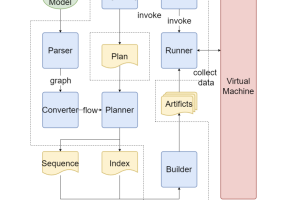

Algorithms in AI are step-by-step procedures that a machine follows to solve a specific problem. In machine learning, a training algorithm adjusts the parameters of a mathematical model so that the model fits the data. For instance, an algorithm can function as the roadmap that leads an AI system through vast datasets, teaching it to make informed decisions or predictions with minimal human intervention.

Models are what a training algorithm produces. Once an algorithm has run through a dataset, the patterns it found are captured in a model, typically as a set of learned parameters. Think of a model as a blueprint that gives the system a reference point for applying what it has learned to new inputs. Models can specialize in tasks such as recognizing speech, classifying images, or supporting medical diagnoses.
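To make the distinction between an algorithm and a model concrete, here is a minimal sketch in Python. The function names (`fit_line`, `predict`) and the study-hours data are made up for illustration. Ordinary least squares stands in for the training algorithm, and the dictionary it returns is the model.

```python
def fit_line(xs, ys):
    """Training algorithm: ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    # The returned parameters are the "model": a compact summary of the data.
    return {"slope": slope, "intercept": intercept}


def predict(model, x):
    """Apply the learned parameters to a new input."""
    return model["slope"] * x + model["intercept"]


# Hypothetical data: hours studied and the resulting test scores.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

model = fit_line(hours, scores)
print(model)                # the learned slope and intercept
print(predict(model, 5.0))  # prediction for an input not seen in training
```

The algorithm runs once over the data. After that, only the model is needed to make predictions.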

Neural networks are loosely inspired by the structure of the human brain, with layers of nodes playing the role of neurons. These networks “learn” from large amounts of data and perform tasks like object recognition and natural language processing. Neural networks can be either shallow or deep. Deep networks, which have many layers, are the basis of “deep learning,” which captures complex patterns within datasets. The layered structure lets a network build up from simple features to more abstract ones, which helps machines handle context, whether that means determining meaning in a sentence or identifying an object in an image.
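The layered structure described above can be sketched in a few lines of Python. This is an illustrative toy, not a trained network: the weights and biases are hand-picked assumptions, and the sigmoid activation is one common choice among several.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One fully connected layer: each node takes a weighted sum, then a sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters: 2 inputs -> 2 hidden nodes -> 1 output.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)


out = forward([1.0, 1.0])
print(out)  # a single value between 0 and 1
```

In a real network the weights are not chosen by hand but learned from data during training.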

Machine Learning vs. Artificial Intelligence Terminology

Although Machine Learning (ML) is often used interchangeably with Artificial Intelligence (AI), it is essential to distinguish between these terms. AI is the broader concept of machines capable of performing functions that typically require human intelligence. Tasks such as facial recognition, recommendation algorithms, and even beating grandmasters in chess fall under AI’s purview. AI includes a variety of techniques and approaches, one of which is Machine Learning.

ML refers to the subset of AI in which computers learn from past data. Instead of a programmer explicitly coding the rules for a specific task, the machine infers them by analyzing patterns in the data. For tasks where rules are hard to write by hand, this approach often produces more accurate solutions, and the system can keep adapting as new data arrives. For example, predictive search suggestions are possible because a machine learning algorithm has studied an enormous batch of prior searches.
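The search-suggestion example can be illustrated with a deliberately simple stand-in. The sketch below just counts which past queries followed each prefix. Real systems use far richer models, and the names `train_suggester` and `suggest` and the search history are invented for this example. What it shows is that the suggestions come from the data, not from hand-written rules.

```python
from collections import Counter


def train_suggester(past_searches):
    """Learn from data: count which full queries followed each typed prefix."""
    table = {}
    for query in past_searches:
        for end in range(1, len(query) + 1):
            table.setdefault(query[:end], Counter())[query] += 1
    return table


def suggest(table, prefix, k=2):
    """Return up to k of the most frequent past queries starting with prefix."""
    return [query for query, _ in table.get(prefix, Counter()).most_common(k)]


# Hypothetical search history.
history = ["weather today", "weather tomorrow", "weather today", "web browser"]
table = train_suggester(history)

print(suggest(table, "we"))   # most frequent completions of "we"
print(suggest(table, "web"))  # completions of "web"
```

Feeding in a different search history changes the suggestions without changing a single line of the code.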

Machine learning relies on a specific family of algorithms, and part of the confusion in AI terminology arises from the overlap between learning techniques and broader AI concepts. Deep learning is a popular example: it is a branch of machine learning built on neural networks with many layers. Although machine learning is a part of AI, not all AI is machine learning (rule-based expert systems, for example, do not learn from data), so it is important to grasp this nuance.

Deep Learning Vocabulary for Beginners

Deep Learning is a fascinating subfield of artificial intelligence, and although its vocabulary can seem challenging to beginners, learning it is rewarding. Novices are often confused by terms such as “supervised learning,” “unsupervised learning,” and “convolutional neural networks.” The first two apply to machine learning in general, not only to deep learning.

Supervised learning refers to a form of machine learning in which the model learns from labeled data. The algorithm receives examples of inputs together with their corresponding outputs, which teaches it what to output when given new input data. In unsupervised learning, by contrast, the system is fed unlabeled data and must group data points or identify features without being told the correct answers.
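The contrast can be sketched on the same small dataset. In this illustrative example a nearest-centroid classifier stands in for supervised learning and a two-cluster, one-dimensional k-means stands in for unsupervised learning. Both are simple textbook techniques chosen for brevity, and the height data and function names are assumptions made for the example.

```python
def train_nearest_centroid(points, labels):
    """Supervised: use the labels to compute one mean (centroid) per class."""
    sums, counts = {}, {}
    for x, label in zip(points, labels):
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def two_means(points, iterations=10):
    """Unsupervised: 1-D k-means with k=2; no labels are given."""
    c0, c1 = min(points), max(points)  # start from the two extremes
    for _ in range(iterations):
        group0 = [x for x in points if abs(x - c0) <= abs(x - c1)]
        group1 = [x for x in points if abs(x - c0) > abs(x - c1)]
        c0 = sum(group0) / len(group0)
        c1 = sum(group1) / len(group1)
    return c0, c1


# Hypothetical heights in centimetres.
heights = [150.0, 152.0, 155.0, 180.0, 183.0, 185.0]
labels = ["short", "short", "short", "tall", "tall", "tall"]

centroids = train_nearest_centroid(heights, labels)
print(classify(centroids, 178.0))  # supervised: answers with a known label

cluster_centers = two_means(heights)
print(cluster_centers)             # unsupervised: finds two groups, but no names
```

The unsupervised routine finds the same two groups, but it cannot name them "short" and "tall" because it never saw any labels.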

Another valuable term is Convolutional Neural Networks (CNNs), a type of neural network designed for grid-like data such as images. These networks apply convolutions, in which a small filter slides across the input and combines the values in each local region, so that the network can detect local patterns such as edges. CNNs are used extensively in computer vision tasks like facial recognition.
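A convolution is easier to see than to describe. The sketch below slides a tiny filter over a tiny grayscale "image". The image, the filter values, and the name `conv2d` are illustrative assumptions. Like most deep learning libraries, it does not flip the filter, so strictly speaking it computes a cross-correlation.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[row + i][col + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for col in range(out_w)
        ]
        for row in range(out_h)
    ]


# Hypothetical 3x4 image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds where brightness increases left to right.
edge_kernel = [[-1, 1]]

feature_map = conv2d(image, edge_kernel)
print(feature_map)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The output is non-zero only in the column where the dark region meets the bright one, which is what "detecting a local pattern" means here. In a CNN the filter values are learned rather than chosen by hand.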

Recurrent Neural Networks (RNNs) are neural networks designed for sequences of data. RNNs are useful in scenarios like language translation, where the order of words matters for generating coherent output. Unlike standard CNNs, RNNs maintain an internal state that acts as memory, allowing them to use information from previous inputs when processing the current one.
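The "memory" of an RNN is a hidden state that is carried from one step to the next. The following minimal sketch uses a single recurrent unit with fixed, hand-picked weights (an assumption for illustration, since real weights are learned) and shows that feeding the same values in a different order produces a different final state.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, bias):
    """One step of a single-unit RNN: mix the new input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + bias)


def run_rnn(sequence, w_x=1.0, w_h=0.5, bias=0.0):
    """Process a sequence one element at a time, carrying the hidden state along."""
    h = 0.0  # the state starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, bias)
        states.append(h)
    return states


forward_states = run_rnn([1.0, 0.0])
reversed_states = run_rnn([0.0, 1.0])

print(forward_states[-1])   # final state after seeing 1 then 0
print(reversed_states[-1])  # final state after seeing 0 then 1
```

Because each state depends on the one before it, the network's output reflects the order of the inputs and not just which values appeared.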

Technical AI Terms Demystified

Technical terminologies are often the most difficult aspect of learning Artificial Intelligence. As technologies evolve at a rapid rate, so do the intricacies of terminologies you need to be familiar with.

One common technical term you’ll encounter is backpropagation. This refers to the procedure neural networks use to work out how much each weight contributed to the error. After data passes through the network, backpropagation measures the difference between actual and expected outputs and propagates that error backward through the layers, yielding a gradient for every weight. Those gradients are then used to adjust the weights, which makes the network more accurate over time.
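As a rough illustration, the sketch below applies backpropagation by hand to the smallest possible network: one input, one sigmoid hidden node, and one linear output, with a squared-error loss. The network shape, the starting weights, and the variable names are all assumptions chosen to keep the chain rule visible. The final lines compare the result against a numerical estimate of the same gradient.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w1, w2, x, target):
    """Forward pass followed by half the squared error."""
    hidden = sigmoid(w1 * x)
    prediction = w2 * hidden
    return 0.5 * (prediction - target) ** 2


def backprop(w1, w2, x, target):
    """Return the gradient of the loss with respect to each weight."""
    # Forward pass: keep the intermediate values, they are reused below.
    hidden = sigmoid(w1 * x)
    prediction = w2 * hidden

    # Backward pass: apply the chain rule from the output back to the input.
    d_prediction = prediction - target               # dLoss/dPrediction
    d_w2 = d_prediction * hidden                     # dLoss/dw2
    d_hidden = d_prediction * w2                     # dLoss/dHidden
    d_w1 = d_hidden * hidden * (1.0 - hidden) * x    # dLoss/dw1
    return d_w1, d_w2


# Hypothetical starting weights and a single training example.
w1, w2, x, target = 0.5, -1.0, 2.0, 1.0
grad_w1, grad_w2 = backprop(w1, w2, x, target)

# Sanity check: estimate the same gradient by nudging w1 slightly.
eps = 1e-6
numeric_w1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)

print(grad_w1, numeric_w1)  # the two values should agree closely
```

Backpropagation itself only computes the gradients. A separate optimization step uses them to change the weights.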

Another term is gradient descent, an optimization algorithm used to minimize the error of machine learning models. Gradient descent works iteratively: at each step it nudges the model’s parameters in the direction that most reduces the “loss,” the measure of error between predictions and actual values. Backpropagation supplies the gradients and gradient descent uses them to update the weights, so the two work together when training neural networks.
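Here is a minimal sketch of gradient descent fitting a one-parameter model, `y = w * x`, by repeatedly stepping against the gradient of the mean squared error. The data, the learning rate of 0.05, and the step count are illustrative assumptions. In practice these settings need tuning.

```python
def mse_gradient(w, xs, ys):
    """Derivative of mean((w * x - y) ** 2) with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, learning_rate=0.05, steps=50):
    """Start from w = 0 and repeatedly move a small step downhill."""
    w = 0.0
    for _ in range(steps):
        w -= learning_rate * mse_gradient(w, xs, ys)
    return w


# Hypothetical data generated by the rule y = 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w = gradient_descent(xs, ys)
print(w)  # approaches 2.0
```

A learning rate that is too large makes the steps overshoot and diverge, while one that is too small makes training slow.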

Overfitting is another key term in AI’s technical sphere. Overfitting happens when a model fits its training data too closely, memorizing noise and quirks rather than general patterns, so it performs well on the training data but poorly on new data. This makes it unreliable for broader application. It’s one of the common challenges in Machine Learning that researchers and engineers are constantly working to address.
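Overfitting shows up as a gap between training error and test error. The sketch below compares two models on the same noisy data: a lookup model that simply memorizes the training points, and a straight-line fit. The data is hand-constructed for illustration: the training targets follow `y = 2x` with alternating noise of +1 and -1, and the test targets are noise-free.

```python
def fit_line(xs, ys):
    """Simple model: least-squares straight line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def memorize(xs, ys):
    """Overfit model: return the target of the nearest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda pair: abs(pair[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: y = 2x plus alternating noise for training, none for testing.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [1.0, 1.0, 5.0, 5.0, 9.0, 9.0]
test_x = [0.4, 1.4, 2.4, 3.4, 4.4]
test_y = [0.8, 2.8, 4.8, 6.8, 8.8]

line = fit_line(train_x, train_y)
lookup = memorize(train_x, train_y)

results = {
    "lookup": (mse(lookup, train_x, train_y), mse(lookup, test_x, test_y)),
    "line": (mse(line, train_x, train_y), mse(line, test_x, test_y)),
}
for name, (train_error, test_error) in results.items():
    print(name, "train:", round(train_error, 3), "test:", round(test_error, 3))
```

The lookup model scores a perfect zero on the training data because it has memorized the noise, yet it does worse than the simple line on the unseen points. Common remedies include collecting more data, choosing a simpler model, and applying regularization.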

AI Buzzwords in Industry Applications

The rise of AI has brought with it a variety of buzzwords that permeate industry discussions and marketing materials. Leaders in healthcare, automotive, and education are keen to embrace these innovations, often using buzzwords that oversimplify the AI tools they adopt.

Cognitive computing is one such buzzword. Broadly, it refers to systems capable of mimicking human cognition, but in reality, it often consists of complex AI and ML systems designed to enhance decision-making capabilities in specific use cases.

Autonomous systems is another term that can apply to several layers of AI technology but is often used to refer to self-operating machines like self-driving cars or drones. Frequently heard in industries requiring automation, it speaks to the substantial use of AI algorithms for navigation and decision-making without human intervention.

Similarly, NLP (Natural Language Processing) is frequently heard in discussions about AI’s role in improving human-machine interaction. NLP allows machines to “understand” and respond to human language, and it is transforming areas such as customer support and powering personal assistants like Alexa or Siri.

Jargon-Free Explanation of AI Terms

AI often comes with intimidating jargon, but these concepts can be broken down in a way that makes them more accessible. At its most basic, Artificial Intelligence simply means a machine’s ability to do things that typically require human smarts, like making decisions or sorting information.

Machine Learning, one of AI’s child technologies, refers to machines improving their performance based on past experience, just as humans get better at tasks through practice. In general, predictions become more accurate as more relevant data feeds into the system.

Finally, don’t be afraid of the term neural networks. These networks are simplified mathematical models loosely based on the way neurons in the human brain pass signals to one another. Once trained, they can recognize patterns, which is useful for detecting fraud, identifying faces, or even helping to diagnose diseases.

How Understanding AI Terms Improves Learning

Understanding terms associated with AI can drastically improve your ability to learn about even more advanced topics as you progress. When AI terminology is mastered, even complex reports, research papers, and cutting-edge trends make more sense, enabling a more fulfilling exploration of AI.

This improved understanding facilitates smoother communication with colleagues or mentors in the AI community, bringing better collaboration and faster innovation. Not only will you become more proficient at reading technical articles, but current trends in AI will also be more relatable to your daily experiences, whether you work in an AI-related field or use AI products in everyday life.

By building a strong vocabulary foundation, learners become better able to anticipate where this continuously evolving field is heading. Every term learned is another step toward mastering the art and science of Artificial Intelligence.

Essential Glossary for AI Enthusiasts

Building a glossary of important AI terms is an indispensable aid for enthusiasts. Start by cataloging frequent keywords like “algorithms,” “supervised learning,” and “convolutional networks.” Be sure to consult resources that cover newer topics such as transfer learning and reinforcement learning, as well as adjacent fields like quantum computing.

Keeping a glossary not only aids memory retention but also makes it easier to explain the significance of these terms to beginners interested in AI. Over time, by revisiting these terms and seeing them in practical contexts, enthusiasts build a deeper comprehension of how AI functions.

A foundational glossary doesn’t just make learning smoother. It also provides the confidence to experiment and develop one’s own projects. Understanding basic and semi-advanced terms empowers future AI leaders to push the boundaries of this transformative technology.

Conclusion

Mastering AI terminologies is key to understanding complex Artificial Intelligence systems that drive the future. Whether you’re a beginner or a seasoned professional, building a firm grasp on keywords like “algorithms,” “models,” and “neural networks” allows you to connect with the enormous possibilities AI offers. Gaining knowledge of AI-related jargon makes the learning experience richer and aligns with the ongoing evolution of technology, bringing immense opportunities in various sectors. The more proficient you become in using AI terminology, the easier it becomes to stay updated with the rapid developments of the field.
