Since the rollout of ChatGPT, companies in almost every industry have been looking to leverage the text generation and retrieval capabilities made possible by large language models (LLMs). Well-known LLMs like GPT, Bard and LLaMA have been trained on massive, internet-scale corpora of publicly available data, and their parameters number in the billions.

At such scale, it’s not surprising that today’s LLMs are energy-intensive and expensive to run, but they don’t have to be. According to research from the expert.ai R&D team, lightweight models can achieve the high performance levels that companies expect at significantly lower cost.

The Cost of LLMs

Every calculation that a large language model performs in response to a query incurs a cost in computing power, largely because of the huge number of parameters involved. But compute is only part of the cost of using an LLM.
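To give a sense of scale, a common back-of-the-envelope approximation (an assumption here, not a method from our paper) is that a dense transformer's forward pass costs roughly 2 floating-point operations per parameter for each token processed. The Python sketch below applies that rule to GPT-3's 175 billion parameters and to a model the size of BERT-base (about 110 million parameters). The function name `flops_per_token` is hypothetical, and the estimate ignores attention costs that grow with context length.

```python
# Rule of thumb (an approximation, not our paper's methodology): a dense
# transformer's forward pass costs about 2 FLOPs per parameter per token.
# Attention costs that scale with context length are ignored here.


def flops_per_token(num_params: float) -> float:
    """Approximate forward-pass FLOPs needed to process one token."""
    return 2.0 * num_params


GPT3_PARAMS = 175e9   # GPT-3: 175 billion parameters
SMALL_PARAMS = 110e6  # a BERT-base-sized model: about 110 million parameters

large = flops_per_token(GPT3_PARAMS)
small = flops_per_token(SMALL_PARAMS)

print(f"175B-parameter model: {large:.2e} FLOPs per token")
print(f"110M-parameter model: {small:.2e} FLOPs per token")
print(f"Ratio: {large / small:,.0f}x")
```

Under this approximation, every token costs the larger model about 1,600 times more compute than the smaller one, before any retraining or fine-tuning is counted.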

Even when released as pre-trained models, LLMs still require additional fine-tuning to meet real-world enterprise requirements. That’s because most models are trained on internet repositories like Wikipedia and other publicly available sources, rather than on the domain-specific data that businesses need for the use cases where the models will be applied. Retraining and tuning can be expensive, especially since it may take multiple training cycles to get the results you need. The financial costs are significant: according to one estimate, training a model like GPT-4 costs from $500,000 to more than $4 million. Add to that the cost of compute instances and of the talent needed to carry out or manage the necessary fine-tuning.

In addition to the financial costs, companies that have established climate and ESG goals and are tracking their carbon emissions will also find LLMs expensive and at odds with these commitments. Training GPT-3, for example, has been estimated to consume 1,287,000 kilowatt-hours of electricity and to emit 552 metric tons of CO2 equivalent.
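The two GPT-3 figures measure different things: kilowatt-hours measure the energy consumed, while metric tons of CO2 equivalent measure the resulting emissions. They are linked by the carbon intensity of the electricity used (emissions = energy × intensity). A minimal Python sketch, using the published estimates and hypothetical helper names, recovers the intensity those two numbers imply:

```python
# Energy and emissions are related through the carbon intensity of the grid:
#   emissions (kg CO2e) = energy (kWh) * intensity (kg CO2e per kWh)

TRAINING_ENERGY_KWH = 1_287_000  # estimated electricity used to train GPT-3
TRAINING_EMISSIONS_T = 552       # estimated emissions, metric tons CO2e


def implied_intensity(energy_kwh: float, emissions_tonnes: float) -> float:
    """Return the carbon intensity (kg CO2e per kWh) implied by the two estimates."""
    return emissions_tonnes * 1000 / energy_kwh


def emissions_for(energy_kwh: float, intensity_kg_per_kwh: float) -> float:
    """Return emissions in metric tons CO2e for a given energy use and grid intensity."""
    return energy_kwh * intensity_kg_per_kwh / 1000


intensity = implied_intensity(TRAINING_ENERGY_KWH, TRAINING_EMISSIONS_T)
print(f"Implied grid intensity: {intensity:.3f} kg CO2e/kWh")

# Hypothetical comparison: the same energy drawn from a 0.1 kg/kWh grid.
print(f"On a 0.1 kg/kWh grid: {emissions_for(TRAINING_ENERGY_KWH, 0.1):.1f} t CO2e")
```

The implied intensity is roughly 0.43 kg CO2e per kWh. Because emissions scale linearly with both energy and intensity, a model that needs less energy cuts emissions proportionally on any grid. The 0.1 kg/kWh grid above is purely illustrative.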

According to a recent study by S&P, this does present a challenge for companies: “More than two-thirds (68%) of respondents indicate they are concerned about the impact of AI/ML on their organization’s energy use and carbon footprint.”

Therefore, a solution that is both effective and less resource-intensive will prove vital for the balance sheet across the board.

LLMs: Can Accuracy and Energy Efficiency Coexist?

While much of the research around LLMs focuses on accuracy, far less addresses how to make them more efficient in terms of energy use and cost. Our research team asked: why not look at these factors together?

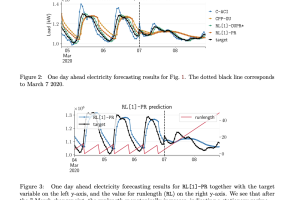

We analyzed performance together with energy consumption, cost and carbon footprint to see how to achieve the best balance among them in a real business scenario.

Our research found that lightweight models can achieve the high performance levels that companies expect at significantly lower cost.

The results indicate that, very often, the simplest techniques achieve performance very close to that of large LLMs, with much lower power consumption and resource demands.

The findings are published in our paper, “An Energy-Based Comparative Analysis of Common Approaches to Text Classification in the Legal Domain,” which was presented at the 4th International Conference on NLP & Text Mining (NLTM 2024) held earlier this year in Copenhagen.

Looking Forward

Companies are weighing many factors as they choose AI solutions for their business challenges. The opportunities that LLMs offer for efficiency and innovation are not insignificant, but costs and challenges around data, bias and security remain barriers that need to be addressed. In our experience, many companies are taking a thoughtful approach to gain a deeper understanding of the capabilities available, the realities of deployment and the full range of options for delivering the value they are looking for.

Continued experimentation with the variety of AI tools available will pave the way for ever more efficient, effective and responsible AI adoption.