Mastering the Art of Hyperparameter Tuning: Tips, Tricks, and Tools

Image by Anthony on Pexels

Machine learning (ML) models contain numerous adjustable settings called hyperparameters that control how they learn from data. Unlike model parameters that are learned automatically during training, hyperparameters must be carefully configured by developers to optimize model performance. These settings range from learning rates and network architectures in neural networks to tree depths in decision forests, fundamentally shaping how models process information.

This article explores essential methods and proven practices for tuning these critical configurations to achieve optimal model performance.

What are Hyperparameters?

In ML, hyperparameters are like the buttons and gears of a radio system or any machine: these gears can be adjusted in multiple ways, influencing how the machine operates. Similarly, an ML model’s hyperparameters determine how the model learns and processes data during training and inference, affecting its performance, accuracy, and speed in optimally performing its intended task.

Importantly, as stated above, parameters and hyperparameters are not the same. ML model parameters — also called weights — are learned and adjusted by the model during training. This is the case of coefficients in regression models and connection weights in neural networks. In contrast, hyperparameters are not learned by the model but are set manually by the ML developer before training to control the learning process. For instance, several decision trees trained under different hyperparameter settings for their maximum depth, splitting criterion, etc., may yield models that look and behave differently, even when they are all trained on identical datasets.

Difference between parameters and hyperparameters in ML models

Image by Author

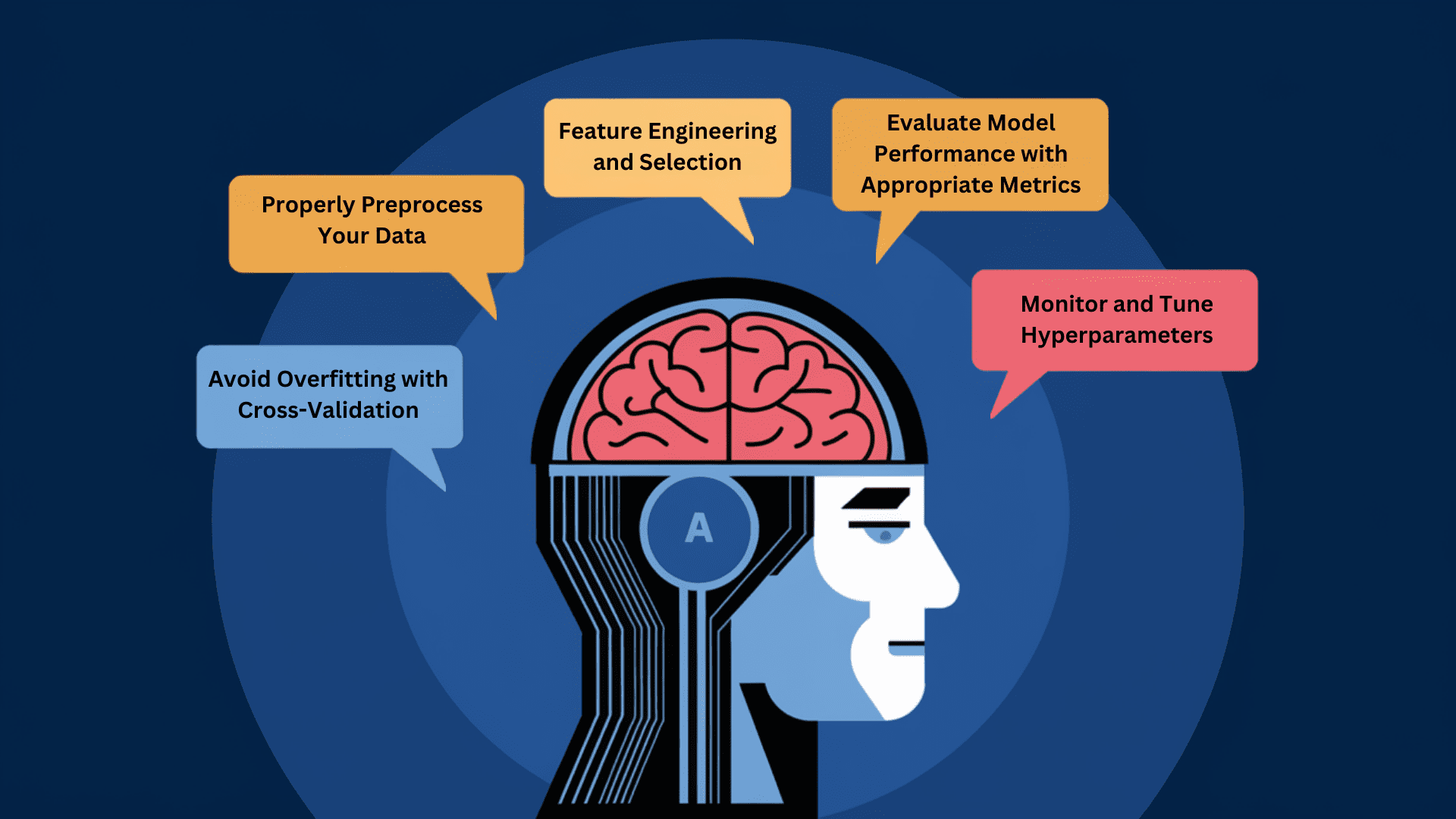

Tuning Hyperparameters: Tips, Tricks and Tools

As a rule of thumb, the more sophisticated an ML model, the wider the range of hyperparameters that shall be adjusted to optimize its behavior. Unsurprisingly, deep neural networks are among the model types with the most different hyperparameters to look after — from learning rate to number and type of layers to batch size, not to mention activation functions, which heavily influence nonlinearity and the capability to learn complex but useful patterns from data.

So, the question arises: How do we find the best setting for the hyperparameters in our model, when it sounds like finding a needle in a haystack?

Finding the best “version” of our model requires evaluating its performance based on metrics, hence it takes place as part of the cyclic process of training, evaluating, and validating the model, as shown below.

Within ML systems lifecycle, hyperparameter tuning takes place during model training and evaluation

Image by Author

Of course, when there are several hyperparameters to play with, and each one may take a range of possible values, the number of possible combinations — the positions in which all buttons in the radio system can be adjusted — can quickly become very large. Training every possible combination may be unaffordable in terms of cost and time invested, hence better solutions are needed. In more technical words, the search space becomes immense. A common tool to perform this daunting optimization task more efficiently is by applying search processes. Two common search techniques for hyperparameter tuning are:

- Grid search: this method exhaustively searches through a manually specified subset of the hyperparameter space, by testing all possible combinations within that subset. It reduces the burden of trying different regions of the search space, but may still become computationally expensive when dealing with many parameters and values per parameter. Suppose for instance a neural network model on which we’ll try tuning two hyperparameters: learning rate, with the values, 0.01, 0.1, and 1; and batch size, with the values 16, 32, 64, and 128. A grid search would evaluate 3 × 4 = 12 combinations in total, training 12 versions of the model and evaluating them to identify the best-performing one.

- Random search: random search simplifies the process by sampling random combinations of hyperparameters. It’s faster than grid search and often finds good solutions with less computational cost, particularly when some hyperparameters are more influential in model performance than others

Besides these search techniques, other tips and tricks to consider to further enhance the hyperparameter tuning process include:

- Cross-validation for more robust model evaluation: Cross-validation is a popular evaluation approach to ensure your model is more generalizable to future or unseen data, providing a more reliable measure of performance. Combining search methods with cross-validation is a very common approach, even though it means even more rounds of training and time invested in the overall process.

- Gradually narrow down the search: start with a coarse or broad range of values for each hyperparameter, then narrow down based on initial results to further analyze the areas around the most promising combinations.

- Make use of early stopping: in very time-consuming training processes like those in deep neural networks, early stopping helps stop the process when performance barely keeps improving. This is an effective solution against overfitting problems. Early stopping threshold can be deemed as a special kind of hyperparameter that can be tuned as well.

- Domain knowledge to the rescue: leverage domain knowledge to set realistic bounds or subsets for your hyperparameters, guiding you to the most sensible ranges to try from the start and making the search process more agile.

- Automated solutions: there are advanced approaches like Bayesian optimization to intelligently optimize the tuning process by balancing exploration and exploitation, similar to some reinforcement learning principles like bandit algorithms.

Hyperparameter Examples

Let’s have a look at some key Random Forest hyperparameters with practical examples and explanations:

⚙️ n_estimators: [100, 500, 1000]

- What: Number of trees in the forest

- Example: With 10,000 samples, starting at 500 trees often works well

- Why: More trees = better generalization but diminishing returns; monitor OOB error to find sweet spot

⚙️ max_depth: [10, 20, 30, None]

- What: Maximum depth of each tree

- Example: For tabular data with 20 features, start with

max_depth=20 - Why: Deeper trees capture more complex patterns but risk overfitting;

Nonelets trees grow until leaves are pure

⚙️ min_samples_split: [2, 5, 10]

- What: Minimum samples required to split node

- Example: With noisy data,

min_samples_split=10can help reduce overfitting - Why: Higher values = more conservative splits, better generalization on noisy data

⚙️ min_samples_leaf: [1, 2, 4]

- What: Minimum samples required in leaf nodes

- Example: For imbalanced classification,

min_samples_leaf=4ensures meaningful leaf predictions - Why: Higher values prevent extremely small leaf nodes that might represent noise

⚙️ bootstrap: [True, False]

- What: Whether to use bootstrapping when building trees

- Example: False for small datasets (Why:

Trueenables out-of-bag error estimation but uses only ~63% of samples per tree

Wrapping Up

By implementing systematic hyperparameter optimization strategies, developers can significantly reduce model development time while improving performance. The combination of automated search techniques with domain expertise enables teams to efficiently navigate vast parameter spaces and identify optimal configurations. As ML systems grow more complex, mastering these tuning approaches becomes increasingly valuable for building robust and efficient models that deliver real-world impact, no matter how complex the task may appear.