How do you envision the role of data engineering evolving with the advancements in AI and automation over the next five years?

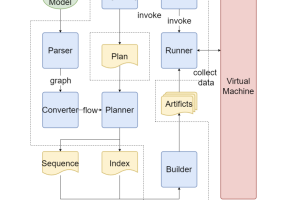

In the next five years, I foresee data engineering leaping forward with greater integration with AI. This integration will automate data quality checks, anomaly detection, and real-time decision-making processes, revolutionizing the field. A practical application already in place involves automated data quality frameworks that monitor and rectify discrepancies in our live data streams, enhancing accuracy by 40{7df079fc2838faf5776787b4855cb970fdd91ea41b0d21e47918e41b3570aafe}. This shift not only improves operational efficiency but also opens up new possibilities for engineers to focus on strategic initiatives like developing predictive analytics models that forecast market trends and customer behavior, sparking excitement and optimism in our audience about the future of data engineering.

During your tenure at AWS, what were some of the key challenges you faced while guiding Fortune 500 firms in cloud data warehouse migrations, and how did you overcome them?

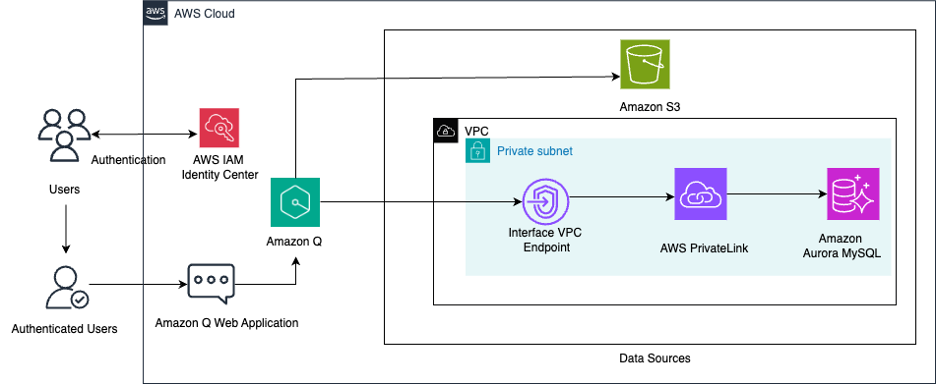

I had a fantastic experience working with the innovative clients of Amazon Web Services. One major challenge at AWS was facilitating the secure and efficient migration of legacy data systems from on-prem data centers and other traditional data warehouses to the cloud for clients like Merck. We managed this by implementing a multi-phased approach, including preliminary data assessment, secure data transfer protocols, and rigorous post-migration testing. For instance, for Merck, this approach reduced their operational downtime by 75{7df079fc2838faf5776787b4855cb970fdd91ea41b0d21e47918e41b3570aafe} during migration and cut their data management costs by half, demonstrating the effectiveness of our strategic methodology.

Can you discuss the innovative data pipelines you’ve architected at Airbnb and how they support the company’s trust and safety initiatives?

Airbnb‘s innovative data pipelines incorporate end-to-end data quality tests that serve as the backbone of the high-quality data at Airbnb. For example, we developed a pipeline integrating image recognition technology to automatically review and verify property photos against listed amenities, improving listing accuracy and preventing fake inventory on the platform. This technology has increased trust in our platform, evidenced by a 10{7df079fc2838faf5776787b4855cb970fdd91ea41b0d21e47918e41b3570aafe} improvement in guest reviews regarding listing accuracy.

What are the essential components of a robust, scalable data platform for enterprise analytical and machine learning systems, based on your experience?

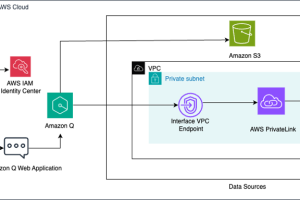

In my experience scalablable and robust data pipelines include sophisticated management and monitoring capabilities. Scalable data storage solutions like Amazon S3, efficient data processing services such as Apache Spark for handling large datasets, and dynamic data ingestion systems like Apache Kafka form the backbone of our data infrastructure at Airbnb. These technologies enable our systems to scale effectively during periods of peak demand, such as the summer travel season, ensuring that we can handle significant increases in data influx without degradation in performance.

To further enhance the robustness and scalability of our data platform, we incorporate rigorous Service Level Agreement (SLA) management to ensure that our data services meet the performance standards required by business operations. Additionally, we employ an advanced alert management system that monitors our data pipelines for failed tasks, automatically triggering corrective actions without human intervention. We also embed data quality checks within each task to ensure high data integrity and utilize anomaly detection algorithms to monitor for data drift, which helps identify potential issues that could affect analytical accuracy or machine learning model performance early. These layers of functionality are critical to maintaining a high-performing, reliable, and secure data environment that supports our dynamic business needs.

How do you balance the demands of maintaining data governance while pushing the boundaries of data innovation and AI at Airbnb?

At Airbnb, we carefully balance the demands of maintaining strict data governance with the drive for innovation in AI and data analytics. We implement a sophisticated tiered data access model that precisely controls who can access data based on their role and the sensitivity of the data. We reinforce this model by automated scripts that anonymize sensitive user data before it’s made available for analysis, ensuring compliance with international data protection regulations such as GDPR. These practices allow our data scientists and engineers to explore innovative data-driven solutions within a secure and compliant framework, fostering a culture of responsible innovation.

Furthermore, we enhance this balance through a structured approach to monitoring and improving data governance metrics. We establish top-level Objectives and Key Results (OKRs) that focus on data quality, data operations metrics, and overall governance. These OKRs are designed to align various teams across Airbnb, enabling them to prioritize decisions and actions that keep our data management practices on track. Regular reviews of these OKRs help us identify areas for improvement and drive enhancements in our data systems. This structured metric-driven approach ensures that while we push the boundaries of what’s possible with data science and artificial intelligence, we never compromise on the integrity and security of our data, thus maintaining trust with our users and stakeholders.

At several Data analytics and AI conferences, you’ve shared your insights. What are some emerging trends in data engineering that you believe will significantly impact the industry?

A particularly impactful trend in data engineering is adopting tools and frameworks that gather information about the data’s lineage from the source to the end-user systems, including column-level lineage. For example, companies of all sizes can perform an impact analysis of an upstream data change on the downstream systems, thus avoiding potential data quality issues that get caught always after they happen. This advancement will enable data and analytics teams to support their stakeholders with high-quality data and provide lineage information.

Could you elaborate on a particularly challenging project you led at Airbnb and how it has shaped your approach to data engineering and AI?

A challenging yet rewarding project involved significantly redesigning our data processing workflows to integrate real-time data feeds from multiple sources. This initiative required rearchitecting our existing batch processing systems to handle streaming data using cutting-edge technologies like Apache StarRocks. The result was a remarkable 90{7df079fc2838faf5776787b4855cb970fdd91ea41b0d21e47918e41b3570aafe} reduction in data latency and a more responsive fraud detection system, fundamentally transforming how we handle data at scale. This project’s success is a testament to our team’s expertise and commitment to pushing the boundaries of data engineering.

How do you foster a culture of innovation and continuous learning within your team at Airbnb, especially in the fast-evolving fields of data engineering and AI?

We employ a variety of hands-on and collaborative activities designed to promote creative problem-solving and skill development. I regularly organize boot camps and skill-building workshops that cover new technologies and methodologies, helping our team stay ahead of the curve.

We have instituted bi-annual “Alert-a-thons,” where team members collaboratively review and analyze existing offline data pipeline alerts. These sessions are crucial for identifying and tuning the alerts to enhance the quality and relevance of the notifications our systems generate. By engaging in these Alert-a-thons, our engineers gain deeper insights into the operational aspects of our data infrastructure. They are encouraged to think critically about how to improve our systems.