LLMs are expanding beyond their traditional role in dialogue systems and are increasingly used to perform tasks in real-world applications. It is no longer science fiction to imagine that many interactions on the internet will take place between LLM-powered systems. Today, however, humans still verify LLM-generated outputs, such as code, for correctness before they are executed, a process made difficult by how hard that code can be to understand. Letting agents interact directly with software systems opens avenues for innovative applications, but it also introduces risks. For instance, an LLM-powered personal assistant could inadvertently send sensitive emails, which highlights the need to address critical challenges in system design to prevent such errors.

The challenges of ubiquitous LLM deployments span several facets, including delayed feedback, aggregate signal analysis, and the disruption of traditional testing methodologies. Delayed signals from LLM actions hinder rapid iteration and error identification, which calls for asynchronous feedback mechanisms. Aggregate outcomes, rather than individual responses, become critical for evaluating system performance, which challenges conventional evaluation practices. The dynamic, often nondeterministic behavior of LLMs complicates unit and integration testing. Variable text-generation latency affects real-time systems, and safeguarding sensitive data from unauthorized access remains paramount, especially in LLM-hosted environments.

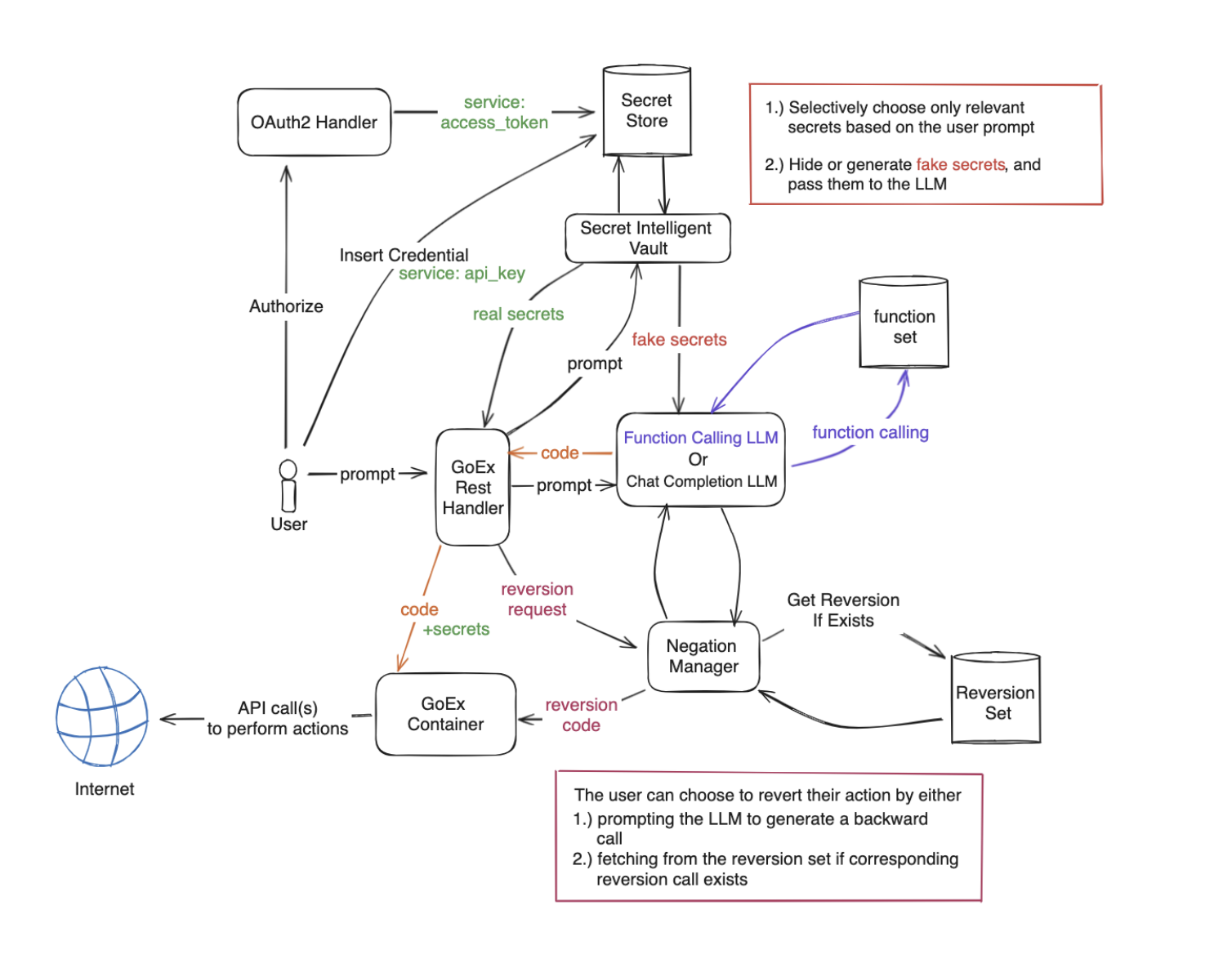

Researchers from UC Berkeley propose the concept of “post-facto LLM validation” as an alternative to “pre-facto LLM validation.” In this approach, humans judge the outcome produced by executing LLM-generated actions rather than evaluating the process or intermediate outputs, such as the generated code itself. Because executing actions before they are validated risks unintended consequences, the approach introduces two abstractions to mitigate that risk: “undo,” which allows LLM-powered systems to retract unintended actions, and “damage confinement,” which quantifies the user's risk tolerance and limits the potential impact of an action accordingly. The researchers also developed the Gorilla Execution Engine (GoEx), a runtime for executing LLM-generated actions that uses off-the-shelf software components to assess how ready existing resources are for this approach and to support developers in adopting it.
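To make the two abstractions concrete, here is a minimal Python sketch of a post-facto validation loop. It is not GoEx's API: the `Action` and `PostFactoRunner` classes, the integer `blast_radius` score, and the `risk_tolerance` threshold are hypothetical names chosen for illustration. The runner blocks any action whose assumed blast radius exceeds the user's tolerance (damage confinement), executes the rest, shows the outcome to a human, and calls the action's compensating function if the outcome is rejected (undo).

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    """An LLM-generated action plus a compensating function that reverts it."""
    description: str
    execute: Callable[[], str]   # performs the action and returns an observable outcome
    undo: Callable[[], None]     # reverts the action's effect (may be a no-op if impossible)
    blast_radius: int            # assumed risk score: 0 (harmless) to 10 (irreversible)


@dataclass
class PostFactoRunner:
    """Illustrative post-facto validation loop (hypothetical, not GoEx's API)."""
    risk_tolerance: int          # damage confinement: largest blast radius the user accepts
    log: List[str] = field(default_factory=list)

    def run(self, action: Action, approve: Callable[[str], bool]) -> bool:
        # Damage confinement: never execute actions riskier than the user allows.
        if action.blast_radius > self.risk_tolerance:
            self.log.append(f"blocked: {action.description}")
            return False
        # Post-facto validation: execute first, then a human judges the outcome.
        outcome = action.execute()
        if approve(outcome):
            self.log.append(f"committed: {action.description}")
            return True
        # Undo: revert the effect because the outcome was rejected.
        action.undo()
        self.log.append(f"undone: {action.description}")
        return False


# Usage with a toy in-memory calendar standing in for a real service.
calendar: List[str] = []


def add_meeting() -> str:
    calendar.append("Team sync, Fri 10:00")
    return f"calendar now: {calendar}"


def remove_meeting() -> None:
    calendar.remove("Team sync, Fri 10:00")


runner = PostFactoRunner(risk_tolerance=5)
runner.run(Action("add meeting", add_meeting, remove_meeting, blast_radius=2),
           approve=lambda outcome: False)  # the human rejects the outcome
# Sending email cannot be undone, so it gets a high blast radius and is blocked.
runner.run(Action("send email to all contacts", lambda: "sent", lambda: None, blast_radius=9),
           approve=lambda outcome: True)
print(calendar)    # []
print(runner.log)  # ['undone: add meeting', 'blocked: send email to all contacts']
```

In this sketch, undo is supplied per action as a compensating function. Actions with no meaningful undo, such as sending an email, are exactly the ones damage confinement should keep out of reach.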

GoEx provides a runtime environment for executing LLM-generated actions securely and flexibly, with “undo” and “damage confinement” abstractions that can be adapted to diverse deployment contexts. It supports several kinds of actions, including RESTful API requests, database operations, and filesystem actions. For databases, a DBManager class supplies the LLM with database state information and access configuration without exposing sensitive data. Credentials are stored locally and used to establish the connections needed to execute operations initiated by the LLM.

The key contributions of this paper are the following:

- The researchers advocate integrating LLMs into a wide range of systems, envisioning them as decision-makers rather than mere data compressors. They highlight challenges such as LLM unpredictability, trust, and real-time failure detection.

- They propose “post-facto LLM validation” to ensure system safety by validating outcomes rather than processes.

- They introduce “undo” and “damage confinement” abstractions to mitigate unintended actions in LLM-powered systems.

- They present GoEx, a runtime facilitating autonomous LLM interactions, prioritizing safety while enabling utility.

In conclusion, this research introduces “post-facto LLM validation” for verifying and reverting LLM-generated actions, alongside GoEx, a runtime with undo and damage confinement features. Together, these aim to enable the safer deployment of LLM agents. The researchers also lay out their vision of autonomous LLM-powered systems and outline open research questions, anticipating a future in which LLM-powered systems interact with tools and services independently, with minimal human verification.

