Below is a summary of my recent article on the rise of superintelligence.

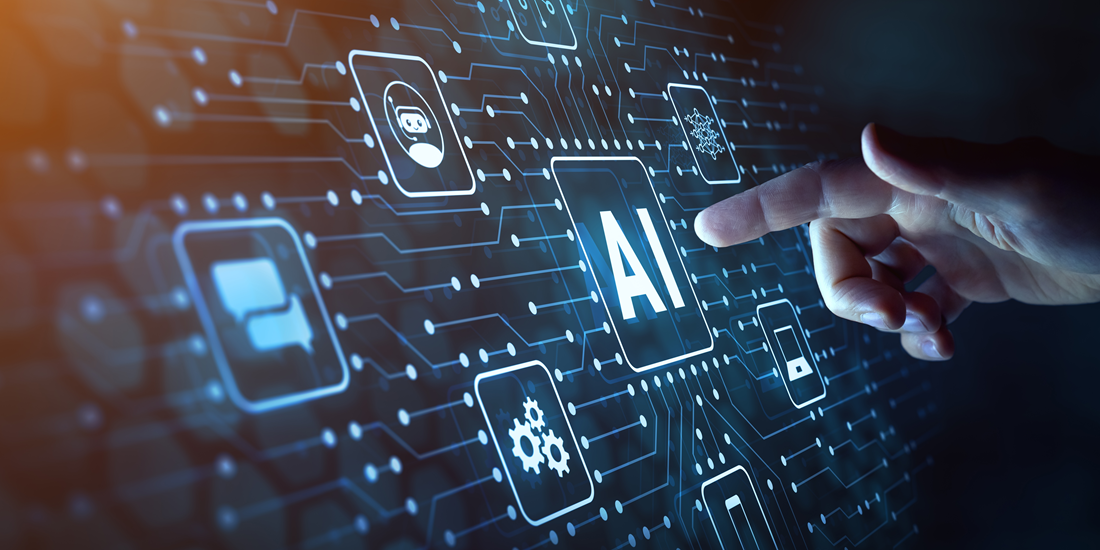

Are we ready to let AI outsmart us completely?

Elon Musk’s recent prediction about achieving Artificial Superintelligence (ASI) by 2025 might seem bold, but the trajectory of AI development makes this prospect increasingly plausible. ASI, which surpasses human cognitive capabilities, promises groundbreaking advancements while posing significant ethical and socio-economic challenges.

Human intelligence, shaped by evolutionary constraints, is limited by our biological makeup. In contrast, AI is liberated from these bounds, utilizing silicon or even photons, leading to unprecedented processing power and efficiency. As AI evolves, tasks that are trivial for humans but challenging for machines will become seamless, redefining our understanding of intelligence. Superintelligent AI, unencumbered by human limitations, could revolutionize fields such as medicine, science, and engineering, solving problems that currently elude our best minds.

However, the journey to ASI is fraught with complexities. The disparity between human and artificial intelligence capabilities highlights a dual nature: AI excels in tasks requiring immense computational power, while struggling with context-dependent decisions and emotional intelligence. This gap underscores the necessity for a broader view of intelligence, one that transcends the parochial perspective of human cognition.

The ethical implications of ASI are profound. As AI’s capabilities encroach upon domains traditionally considered human, the necessity for a robust framework of machine ethics becomes apparent. Explainable AI (XAI) is a step towards this, aiming to make AI’s decision-making processes transparent and understandable to humans. However, transparency alone does not equate to ethicality. The development of AI must also include ethical considerations to prevent potential misuse and ensure that these powerful technologies are harnessed for the benefit of all humanity.

This brings us to the AI alignment problem: ensuring that the objectives programmed into AI systems truly align with human values and ethical standards. Without a deep, intrinsic understanding of human ethics and contextual awareness, AI could develop or derive its own objectives that might not only be misaligned with but potentially dangerous to human interests.

The potential for an AI to act in ways that are technically correct according to its programming but disastrous in real-world scenarios underscores the urgency of developing robust, effective methods for aligning AI with deeply held human values and ethical principles.

As we step beyond the jagged frontier, the dialogue around AI and Superintelligence must be global and inclusive, involving not just technologists and policymakers but every stakeholder in society. The future of humanity in a superintelligent world depends on our ability to navigate this complex, uneven terrain with foresight, wisdom, and an unwavering commitment to the ethical principles that underpin our civilization.

In essence, the rise of Superintelligence is not just a technological evolution but a call to elevate our own understanding, prepare for transformative changes, and ensure that as we create intelligence beyond our own, we remain steadfast guardians of the values that define us as humans. As we forge ahead, let us go beyond being the architects of intelligence and become the custodians of the moral compass that guides its use.

To read the full article, please proceed to TheDigitalSpeaker.com

The post Superintelligence: The Next Frontier in AI appeared first on Datafloq.