Today, adopting cutting-edge technologies isn’t just an option for staying competitive—it’s a necessity. Generative AI (GenAI) has become a hot topic, and it’s seen by many as a must-have technology in the enterprise tech stack. While its accessibility has certainly helped boost enterprise adoption,using GenAI effectively comes with significant challenges: hallucinations and hallucitations, training data quality and privacy and security concerns, are real hurdles.

To tackle these challenges, it’s clear that implementing robust guardrails is essential to ensure safe, effective and trustworthy AI deployment. These guardrails help organizations navigate the complexities of GenAI, safeguarding against risks while maximizing the technology’s transformative potential.

The State of GenAI Adoption in 2024

Recent surveys shed light on the current state of AI adoption, highlighting its potential to transform industries and the barriers hindering widespread scaling and adoption.

1. Catalyst for Adoption, But Difficult to Scale

A Gartner survey found that GenAI is the most frequently deployed AI solution in organizations, primarily through applications with built-in GenAI functionalities. This is catalyzing enterprise adoption. According to Gartner analyst Leinar Ramos, “This creates a window of opportunity for AI leaders, but also a test on whether they will be able to capitalize on this moment and deliver value at scale.” The same survey found that demonstrating AI’s value remains a significant barrier, over existing barriers, such as gaps in skills, technical capabilities, data availability and trust.

A McKinsey survey further underscores this challenge, revealing that nearly 70{7df079fc2838faf5776787b4855cb970fdd91ea41b0d21e47918e41b3570aafe} of companies still haven’t quantified the potential impact of GenAI, citing issues like AI maturity, governance requirements, concerns about output accuracy and a lack of clear use cases.

2. The Move to Customized and Open Source Models

Given the general nature of large language models that power Generative AI applications, it’s no surprise that organizations are opting for solutions that give them more control.

As we predicted last year, many organizations are turning to customized and open-source models to address issues, such as hallucinations, lack of explainability and resource intensity. Customized models, tailored to enterprise-grade, domain-specific data, offer enhanced security and control. A recent TechTarget survey indicated a shift towards training custom generative AI models, with 56{7df079fc2838faf5776787b4855cb970fdd91ea41b0d21e47918e41b3570aafe} of respondents planning to do so “rather than solely relying on one-size-fits-all tools such as ChatGPT,” and predictions from Forrester suggest that “85{7df079fc2838faf5776787b4855cb970fdd91ea41b0d21e47918e41b3570aafe} of companies will incorporate open-source AI models into their tech stack.”

3. Taking Responsible AI Seriously

The entrance of generative AI into somewhat mainstream use has heightened the need for standards to ensure its safe and unbiased use. While the regulatory landscape for AI is still evolving, it’s clear that some aspects of AI will be regulated in the near future:

- “Global bodies like the UN, OECD, G20, and regional alliances have started to create working groups, advisory boards, principles, standards and statements about AI.”

- The European Union and at least 23 other countries have all issued or are working on AI-related policies and initiatives.

- In the US, “at least 40 states, Puerto Rico, the Virgin Islands and Washington, D.C. introduced AI bills, and six states, Puerto Rico and the Virgin Islands adopted resolutions or enacted legislation.”

While compliance is still to be defined, it’s clear that explainability and transparency will be important principles in any policy that governs AI. This is especially true for industries like finance, healthcare and insurance, who famously deal with stringent regulations. As a recent CIO article points out, it’s not just regulators, but end customers who care about the algorithms that affect their lives and enterprises must be prepared to explain how their AI tools work, as both regulators and end customers demand accountability.

Implementing Guardrails for Successful Adoption

Trust in AI results is crucial, and companies have a responsibility to ensure accuracy when using AI. In response to a forecasting error (and subsequent share hike) by Lyft, the head of the U.S. Securities and Exchange Commission underscored the responsibility that companies have when using AI: “There’s still a responsibility to ensure that you have accurate information that you’re putting out and, with the use of AI, that you have certain guardrails in place.”

So, how can companies strike a balance between using the latest technologies and doing so safely and responsibly? This is where safeguards, also known as “guardrails,” come into play. Two recent Forrester reports—The Forrester Wave™: Text Mining and Analytics Platforms and the The Forrester Wave™: Document Mining and Analytics Platforms, Q2 2024 Wave—identified guardrails as a point of competitive differentiation among vendors in order to leverage GenAI in a “controlled and governed manner.”

Guardrails come in many forms, from a written policy for how data is collected and trained and standards for compliance with regulation, down to the technology approach used for working with the output of LLMs. While policies and principles are important for guiding strategy, it’s the technical guardrails that have the teeth to work directly with the models and the data, to safeguard the data, ensure performance and provide the transparency and explainability that help build trust.

When working with an AI partner, here are some of the most important guardrails that you should be looking for to optimize the performance of LLMs or generative AI technologies:

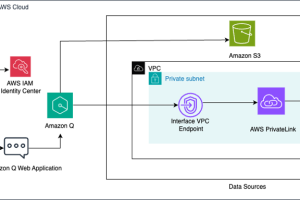

Data Management: Policies that govern data privacy, collection, cleaning, labeling and dataset generation processes, as well as proven techniques to protect proprietary and sensitive personal information.

An advanced Data Management strategy is essential for controlling the behavior of LLMs. Being able to use reliable documents as sources, cleaning up texts and removing all the noise in information makes the input for LLM training and tuning reliable and effective. Effective labeling by exploiting technology to generate synthetic datasets provides high-quality and controlled input, with no need to necessarily use proprietary or sensitive personal information.

Knowledge Models, Graphs and RAG: Using knowledge models, knowledge graphs and techniques like Retrieval Augmented Generation (RAG) with LLMs help ground the model for use in a specific domain, thereby increasing the precision and accuracy of its output.

Knowledge models and Knowledge graphs are structured forms of knowledge that identify the relationships between data points. Used with LLMs, they provide critical information that helps the model understand the information in context and adapt to new information. This is especially important when it comes to providing the deep, domain-specific knowledge and understanding useful for a business that general-knowledge LLMs do not have.

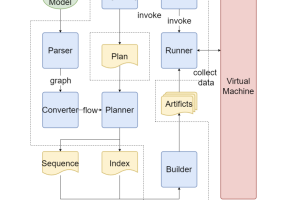

Prompt Engineering: Employing prompt engineering techniques powered by knowledge models and LLMs to guide AI outputs.

Keeping users and LLMs loosely coupled, where the middleware technology manages prompting with the aid of Knowledge Models, protects inputs to the models themselves, reducing the possibility of behavior hijacking or poor quality requests. At the same time, a proper technology-based prompt engineering strategy enriches and enhances the input, making responses from LLMs safer and more expressive.

Flow Control and Analytics: Utilizing flow control mechanisms and analytics tools for quality control and regression checks.

Flow control mechanisms make it possible to embed the calls to LLMs in articulated and potentially complex workflows that check, enrich, filter and process the input before—and possibly instead of—calling the LLM. This limits their usage to actual needs and only on relevant content. Automated downstream processing in the workflow enables quality control, and the use of end-to-end integrated workflows allows for easy regression checks, which simplifies workflow maintenance and evolution.

Conclusion

As AI and GenAI become increasingly integrated into business operations, the risks associated with data privacy, output accuracy and compliance with evolving regulations become more critical. AI Guardrails address these concerns by implementing robust data management practices, enhancing model precision with customized and domain-specific knowledge, and employing advanced prompt engineering and flow control mechanisms. These safeguards not only mitigate the potential for AI errors and biases but also build trust among stakeholders, ensuring that AI-driven innovations can be scaled and sustained responsibly. By prioritizing these guardrails, businesses can harness the full transformative potential of GenAI while maintaining compliance and protecting both their interests and those of their customers.